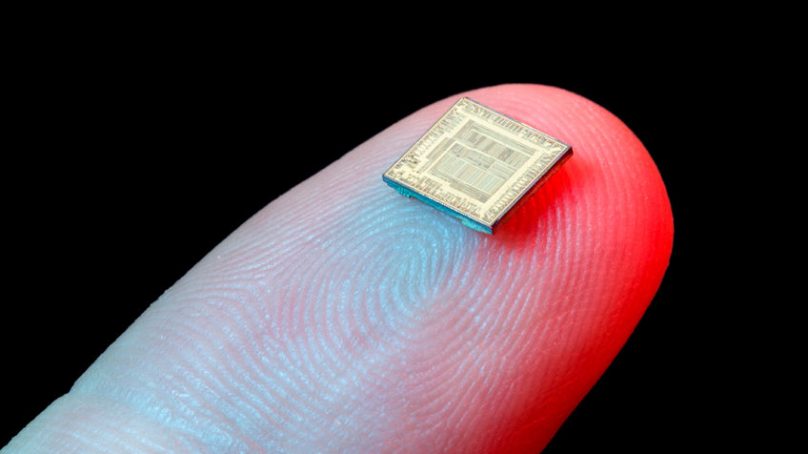

The implantable microchips of the Gates conspiracy theory can be traced to a Gates Foundation-funded paper published in 2019 by MIT researchers, who designed technology to record someone’s vaccination history in the skin, like a tattoo.

It turned out to be the well from which many falsehoods sprang.

The tattoo ink would consist of tiny semiconductor particles called quantum dots whose glow could be read with a modified smartphone. There are no microchips and the quantum dots cannot be tracked or read remotely.

Yet the notion of implanting something to track vaccination status has been discussed. “It isn’t outlandish,” Johnson says. “It’s just outlandish to say it will then be used by Gates in some sinister way.”

What happens, Johnson explains, is that people pick nuggets of fact and stitch them together into a false or misleading narrative that fits their own worldview. These narratives then become reinforced in online communities that foster trust and thus lend credibility to misinformation.

Johnson and his colleagues track online discussion topics in social media posts. Using machine learning, their software automatically infers topics – say, vaccine side effects – from patterns in how words are used together. It’s similar to eavesdropping on multiple conversations by picking out particular words that signal what people are talking about, Johnson says.
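To make the idea of inferring topics from word patterns concrete, here is a minimal sketch that counts which words tend to appear together in the same post. It is an illustration only, not the researchers' software: the sample posts, the stopword list and the function name `cooccurrence_counts` are all assumptions made up for this example, and real topic models are considerably more sophisticated.

```python
from collections import Counter
from itertools import combinations

# Hypothetical stopword list; a real system would use a much longer one.
STOPWORDS = {"the", "a", "of", "and", "to", "is", "in", "are", "about"}


def cooccurrence_counts(posts):
    """Count how often each pair of words appears together in one post."""
    counts = Counter()
    for post in posts:
        # Unique, lower-cased words per post, sorted so each pair has one canonical order.
        words = sorted({w for w in post.lower().split() if w not in STOPWORDS})
        counts.update(combinations(words, 2))
    return counts


# Made-up posts for illustration only.
posts = [
    "vaccine side effects worry parents",
    "reports of vaccine side effects",
    "lockdown and the election",
]

counts = cooccurrence_counts(posts)
top_pairs = counts.most_common(3)  # the word pairs that co-occur most often
```

In this toy data, the pairs built from "vaccine", "side" and "effects" each appear in two posts, so they rise to the top and hint at a shared topic.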

And as in conversations, topics can evolve over time. In the past year, for example, a discussion about the March lockdowns mutated to include the US presidential election and QAnon conspiracy theories, according to Johnson. The researchers are trying to characterise such topic shifts, and what makes certain topics more evolutionarily fit and infectious.

Some broad narratives are especially tenacious. For example, Johnson says, the Gates microchip conspiracy contains enough truth to lend it credibility but is also often dismissed as absurd by mainstream voices, which feeds into believers’ distrust of the establishment.

Throw in well-intentioned parents who are sceptical of vaccines and you have a particularly persistent narrative. Details may differ, with some versions involving 5G wireless networks or radiofrequency ID tags, but the overall story – that powerful individuals want to track people with vaccines – endures.

And in online networks, these narratives can spread especially far. Johnson focuses on online groups, like public Facebook pages, some of which can include a million users. The researchers have mapped how these groups – within and across Facebook and five other platforms, Instagram, Telegram, Gab, 4Chan and a predominantly Russian-language platform called VKontakte – connect to one another with weblinks, where a user in one online group links to a page on another platform. In this way, groups form clusters that also link to other clusters.
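The mapping described above can be pictured as a graph in which each online group is a node and each weblink is an edge. The sketch below is a simplified illustration under that assumption, not the researchers' actual method: the group names are hypothetical, links are treated as two-way, and a "cluster" is taken to mean a set of groups reachable from one another.

```python
from collections import defaultdict, deque


def find_clusters(links):
    """Group nodes into clusters (connected components) using breadth-first search."""
    neighbours = defaultdict(set)
    for a, b in links:
        neighbours[a].add(b)
        neighbours[b].add(a)

    seen, clusters = set(), []
    for start in neighbours:
        if start in seen:
            continue
        seen.add(start)
        component, queue = set(), deque([start])
        while queue:
            node = queue.popleft()
            component.add(node)
            for nxt in neighbours[node] - seen:
                seen.add(nxt)
                queue.append(nxt)
        clusters.append(component)
    return clusters


def cross_platform(links):
    """Return only the links whose two ends sit on different platforms."""
    return [(a, b) for a, b in links if a[0] != b[0]]


# Hypothetical groups, each written as (platform, group name).
links = [
    (("facebook", "group_a"), ("facebook", "group_b")),
    (("gab", "group_c"), ("facebook", "group_a")),
    (("telegram", "group_d"), ("telegram", "group_e")),
]

clusters = find_clusters(links)
bridges = cross_platform(links)
```

Here the Gab group joins the Facebook cluster through a single cross-platform link, while the two Telegram groups form a separate cluster of their own.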

The connections can break and relink elsewhere, creating complex and changing pathways through which information can flow and spread. For example, Johnson says, earlier forms of the Gates conspiracy were brewing on Gab only to jump over to Facebook and merge with more mainstream discussions about Covid-19 vaccinations.

These cross-platform links mean that the efforts of social media companies to take down election- or Covid-19-related misinformation are only partly effective. “Good for them for doing that,” Johnson says. “But it’s not going to get rid of the problem.”

The stricter policies of some platforms – Facebook, for example – won’t stop misinformation from spilling over to a platform where regulations are more relaxed.

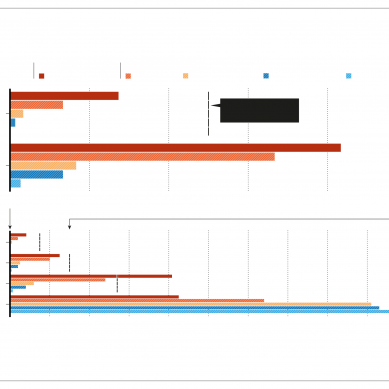

Researchers compared how often users of several social networks reacted to posts (e.g., liked, shared or commented) from unreliable versus reliable sources. Users of the predominantly right-wing network Gab reacted to unreliable information 3.9 times as often as reliable information, on average.

In contrast, YouTube users mostly engaged with reliable information, while users of Reddit and Twitter fell in between. The way that misinformation spreads online seems to depend largely on the characteristics of a network and its users, the researchers say. (X axis shows the ratio of reactions to information from unreliable vs. reliable sources.)

And unless the entire social media landscape is somehow regulated, Johnson adds, misinformation will simply congregate on more hospitable platforms. After companies like Facebook and Twitter started cracking down on election misinformation – even shutting down Trump’s accounts – many of his supporters migrated to platforms like Parler, which are more loosely policed.

To Johnson’s mind, the best way to contain misinformation may be by targeting these inter-platform links, instead of chasing down every article, meme, account or even page that peddles misinformation – which is ultimately a futile game of Whac-A-Mole. To show this, he and his colleagues calculated a different R-value.

As before, their revised R-value describes the contagiousness of a topic, but it also incorporates the effects of dynamic connections in the underlying social networks. Their analysis is not yet peer-reviewed, but if it holds up, the formula can provide a mathematical way of understanding how a topic might spread – and, if that topic is rife with misinformation, how society can contain it.

For example, this new R-value suggests that by taking down cross-platform weblinks, social media companies or regulators can slow the transmission of misinformation so that it no longer spreads exponentially.
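Why an R-value matters can be seen in a toy calculation. The sketch below is not the researchers' formula, which the article does not give. It assumes a simple branching picture in which each group that picks up a topic passes it to R other groups on average, and it splits R into a within-platform part and a cross-platform part. Both numbers are hypothetical.

```python
def expected_reach(r_value, generations):
    """Expected number of groups reached, starting from one group,
    if each group passes the topic on to r_value others on average."""
    total, current = 0.0, 1.0
    for _ in range(generations + 1):
        total += current
        current *= r_value
    return total


# Hypothetical contributions to R; chosen only to illustrate the threshold at 1.
r_within = 0.8  # spread through links inside a platform
r_cross = 0.6   # extra spread through cross-platform links

with_links = expected_reach(r_within + r_cross, 10)  # R above 1: keeps growing
without_links = expected_reach(r_within, 10)         # R below 1: levels off
```

With the cross-platform contribution included, R is above 1 and the expected reach grows with every generation. Without it, R falls below 1 and the total stays bounded, no matter how many generations pass.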

Once regulators identify an online group brimming with misinformation, they can then remove links to other platforms. This needs to be the priority, Johnson says, even more than removing the group pages themselves.

Fact-checking may also help, as some studies suggest it can change minds and even discourage people from sharing misinformation. But the impact of a fact-check is limited, because corrections usually don’t spread as far or as fast as the original misinformation, West says.

“Once you get something rolling, it’s real hard to catch up.” And people may not even read a fact-check if it doesn’t conform to their worldview, Quattrociocchi says.

Other approaches, such as improving education and media literacy and reforming the business model of journalism to prioritize quality over clicks, are all important for controlling misinformation – and, ideally, for preventing conspiracy theories from taking hold in the first place. But misinformation will always exist, and no single action will solve the problem, DiResta says.

“It’s more of a problem to be managed like a chronic disease,” she says. “It’s not something you’re going to cure.”

- A Knowable magazine report