The Federal Trade Commission (FTC) is considering a proposal that would allow businesses to use facial biometric “age-estimation technology” to obtain parental consent for children under 13 to use online gaming services.

Yoti, a digital identity (ID) company; SuperAwesome, a parental-consent management firm owned by Epic Games; and the Entertainment Software Rating Board (ESRB) submitted the proposal.

They are requesting approval for “Privacy-Protective Facial Age Estimation” technology under the Children’s Online Privacy Protection Act (COPPA). Under COPPA, online sites and services “directed to children under 13” must obtain parental consent before collecting or using personal information from a child.

The law includes several “acceptable methods for gaining parental consent” and provisions “allowing interested parties to submit new verifiable parental consent methods” for approval. The FTC’s public comment period for the proposal ends August 21.

In Kenya, meanwhile, an unregistered American tech company was halted in the first week of August after luring unemployed young people to hand over their facial biometrics in exchange for Ksh7,000 ($50).

In the US, the companies backing the proposal claim the technology obtains only portions of a facial image and the data are immediately deleted once the verification process has been completed. However, privacy activists who spoke with The Defender said the proposal does not sufficiently address a number of privacy concerns associated with the technology.

Some experts also raised questions about the implications and potential misuses of facial recognition technology more broadly.

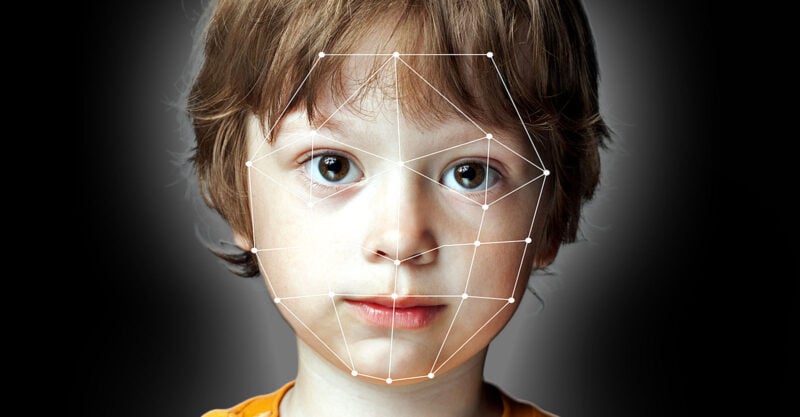

“Privacy-Protective Facial Age Estimation” technology “analyses the geometry of a user’s face to confirm that they are an adult,” according to the FTC. The proposal submitted by the three applicants states: “Privacy-Protective Facial Age Estimation provides an accurate, reliable, accessible, fast, simple and privacy-preserving mechanism for ensuring that the person providing consent is the child’s parent.”

The proposal contrasts the technology with facial recognition: “Facial age estimation uses computer vision and machine learning technology to estimate a person’s age based on analysis of patterns in an image of their face. The system takes a facial image, converts it into numbers, and compares those numbers to patterns in its training dataset that are associated with known ages.

“By contrast, facial recognition technology, which seeks to identify a specific person based on a photo, looks for unique geometric measures of the face, such as the distance and relationship among facial features, and tries to match these to an existing unique set of measurements already recorded in a database along with unique identifying information.”
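To make the distinction in the quoted passage concrete, here is a toy Python sketch. It is not Yoti’s system: the feature vectors, weights, threshold and the function names `estimate_age` and `recognise_face` are all hypothetical. The point is structural: estimation maps numbers derived from a face to a single age value without consulting stored records, while recognition compares those numbers against enrolled templates that are tied to named identities.

```python
import math
from typing import Dict, List, Optional

# Hypothetical toy feature vectors standing in for the "numbers" derived from a face image.
Vector = List[float]


def estimate_age(features: Vector, weights: Vector, bias: float) -> float:
    """Age estimation (toy): map the features straight to one number.
    No database of known people is consulted."""
    return sum(f * w for f, w in zip(features, weights)) + bias


def recognise_face(features: Vector, enrolled: Dict[str, Vector],
                   threshold: float) -> Optional[str]:
    """Facial recognition (toy): compare the features with stored templates
    linked to identities and return the closest name, if it is close enough."""
    best_name, best_dist = None, math.inf
    for name, template in enrolled.items():
        dist = math.dist(features, template)  # Euclidean distance
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None
```

The privacy question the article raises turns on the second function: recognition only works if a database linking face templates to identities exists, whereas the estimation step, as described, needs no such database.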

The applicants said their technology is based on training a “neural network model by feeding it millions of images of diverse human faces with their actual month and year of birth.”

“The system converts the pixels of these images into mathematical functions that represent patterns. Over time, the system has learned to correlate those patterns with the known age,” the applicants stated.

Citing a Yoti white paper, the proposal explains how these images are then processed: “When performing a new age estimation, the system extracts the portions of the image containing a face, and only those portions of the image are analysed for matching patterns.

“To match patterns, each node in Yoti’s neural network performs a mathematical function on the pixel data and passes the result on to nodes in the next layer, until a number finally emerges on the other side. The only inputs are pixels of the face in the image, and the only outputs are numbers. Based on a review of the number patterns, the system creates an age estimation.”
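The layer-by-layer process quoted above is what is generally called a feedforward pass through a neural network. The toy Python sketch below illustrates it under stated assumptions: the image is a tiny greyscale grid of nested lists, the face bounding box is supplied by hand (standing in for a real face detector), and the weights are made up. The helpers `crop_and_flatten`, `dense` and `forward` are hypothetical; this is not Yoti’s model, whose architecture and weights are not described in the passages quoted here.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]  # (weights per node, bias per node)


def crop_and_flatten(image: Matrix, top: int, left: int,
                     height: int, width: int) -> List[float]:
    """Keep only the pixels inside the (assumed) face box, as a flat list."""
    return [px for row in image[top:top + height] for px in row[left:left + width]]


def relu(x: float) -> float:
    return max(0.0, x)


def dense(inputs: List[float], weights: Matrix, biases: List[float],
          activate: bool) -> List[float]:
    """One layer: each node computes a weighted sum of the previous
    layer's outputs plus a bias, optionally passed through ReLU."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(relu(total) if activate else total)
    return outputs


def forward(pixels: List[float], layers: List[Layer]) -> float:
    """Pass pixel values through each layer in turn; the last layer
    has a single node whose output is the final number."""
    values = pixels
    for i, (weights, biases) in enumerate(layers):
        is_last = i == len(layers) - 1
        values = dense(values, weights, biases, activate=not is_last)
    return values[0]
```

In a trained system the weights would be learned from labelled images, as the applicants describe, and the final number would be read as an age estimate; here the values are arbitrary and only show that pixels go in and a number comes out.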

According to the proposal, the user takes a photo of themselves (a selfie) assisted by an “auto face capture module” that guides the positioning of their face in the frame. The system then checks whether there is a live human face in the frame and requires the image to be captured in the moment.

The system doesn’t accept previously captured still images, and photos that don’t meet the quality requirements are rejected. “These factors minimise the risk of circumvention and of children taking images of unaware adults,” according to the proposal.

Julie Dawson, chief policy and regulatory officer for Yoti, told Biometric Update the software “is privacy-preserving, accurate, and easier to use by more parents” compared to existing alternatives.

“In addition, we have completed over four million age estimations for SuperAwesome outside the US – a sign of its popularity among parents. Where facial age estimation is available as an option for parental consent outside the US, more than 70 per cent of parents choose it over other methods,” she stated.

According to Biometric Update, “SuperAwesome and Yoti have worked together on the software and began marketing it outside the US, beginning last year. … It’s reportedly 99.97 per cent accurate in identifying an adult.”

But the companies also concede that “a third of people in the European Union and the United Kingdom to date have been blocked by facial age estimation,” raising questions about the true accuracy of the technology. And a February 2022 Sifted article states that the technology “is usually able to accurately estimate age to within a four to six-year range,” and “as recently as 2018, the accuracy wasn’t where it should be on darker skin tones.”

According to SuperAwesome’s website, the “Personally identifiable information” collected by the technology “includes name, address, geolocation, email address or other contact information, user-generated text or photo/video, and persistent identifiers (like device IDs and IP addresses), many of which are necessary for basic product features.”

Despite assurances that only portions of a facial image are processed, that no other personal information is collected and that the data are deleted immediately, the website also states: “To deliver great online experiences for young audiences, many digital services – including games, educational platforms and social communities – benefit from the use of personal information to power key features or to enable personalisation.”

These statements do not appear to address the possibility that children may try to pass the age check anyway, or that any images collected could still be linked to other personal information that children or parents enter during an online registration or purchase.

It is also unclear how the software, beyond estimating that the person whose face is scanned is an adult, could confirm that this person is the child’s parent or legal guardian rather than an unrelated adult.

Privacy and legal experts who spoke with The Defender questioned whether personally identifiable information, including IP addresses and geolocation data, might still be collected despite assurances to the contrary, and how that information might be used, intentionally or inadvertently.

Greg Glaser, an attorney based in California, told The Defender the proposal is “dystopian.” He said: “Your human face data is inherently identified with you, meaning if someone sees your face, they know who you are even if they don’t know your name. Because your human face image cannot be de-identified for privacy law purposes, tech companies need government authorisation to collect and use the data for new uses (or mechanisms) under current privacy law.

“This FTC action under COPPA is another step in the direction of real-time facial recognition monitoring. The companies claim they need to privately examine your child’s face and body (yes, the body is viewable with the face) in order to enhance security, but it’s a dystopian solution in search of a de minimis problem.”

- The Defender report / By Dr Michael Nevradakis, senior reporter for The Defender