In Belgrade’s Republic Square, dome-shaped cameras hang prominently on wall fixtures, silently scanning people walking across the central plaza.

It is one of 800 locations in the city that Serbia’s government said last year it would monitor using cameras equipped with facial-recognition software, purchased from electronics firm Huawei in Shenzhen, China.

The government didn’t ask Belgrade’s residents whether they wanted the cameras, says Danilo Krivokapić, who directs a human-rights organisation called the SHARE Foundation, based in the city’s old town.

This year, the foundation launched a campaign called Hiljade Kamera (‘thousands of cameras’), questioning the project’s legality and effectiveness and arguing against automated remote surveillance.

Belgrade is experiencing a shift that has already taken place elsewhere. Facial-recognition technology (FRT) has long been in use at airport borders, on smartphones and as a tool to help police identify criminals. But it is now creeping further into private and public spaces.

From Quito to Nairobi, Moscow to Detroit, hundreds of municipalities have installed cameras equipped with FRT, sometimes promising to feed data to central command centres as part of ‘safe city’ or ‘smart city’ solutions to crime. The Covid-19 pandemic might accelerate their spread.

The trend is most advanced in China, where more than 100 cities bought face-recognition surveillance systems last year, according to Jessica Batke, who has analysed thousands of government procurement notices for ChinaFile, a magazine published by the Centre on US-China Relations in New York City.

But resistance is growing in many countries. Researchers, as well as civil-liberties advocates and legal scholars, are among those disturbed by facial recognition’s rise. They are tracking its use, exposing its harms and campaigning for safeguards or outright bans.

Part of the work involves exposing the technology’s immaturity: it still has inaccuracies and racial biases. Opponents are also concerned that police and law-enforcement agencies are using FRT in discriminatory ways and that governments could employ it to repress opposition, target protesters or otherwise limit freedoms, as with the surveillance in China’s Xinjiang region.

Legal challenges have emerged in Europe and parts of the United States, where critics of the technology have filed lawsuits to prevent its use in policing.

Many US cities have banned public agencies from using facial recognition — at least temporarily — or passed legislation to demand more transparency on how police use surveillance tools.

Europe and the United States are now considering proposals to regulate the technology, so the next few years could define how FRT’s use is constrained or entrenched.

“What unites the current wave of pushback is the insistence that these technologies are not inevitable,” wrote Amba Kak, a legal scholar at New York University’s AI Now Institute, in a September report on regulating biometrics.

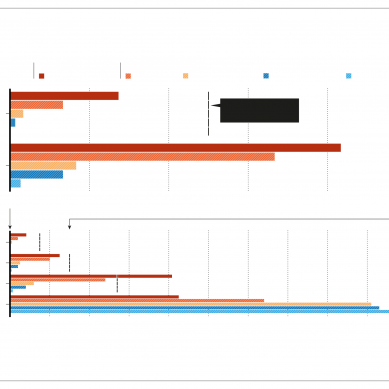

By 2019, 64 countries used FRT in surveillance, says Steven Feldstein, a policy researcher at the Carnegie Endowment for International Peace in Washington DC, who has analysed the technology’s global spread.

Feldstein found that cities in 56 countries had adopted smart-city platforms. Many of them purchased their cameras from Chinese firms, often apparently encouraged by subsidised loans from Chinese banks. (US, European, Japanese and Russian firms also sell cameras and software, Feldstein noted.)

Belgrade’s project illustrates concerns that many have over the rise of smart-city systems: there is no evidence that they reduce crime more than ordinary video cameras do, and the public knows little about systems that are ostensibly for their benefit.

Krivokapić says he is worried that the technology seems more suited to offering an increasingly authoritarian government a tool to curb political dissent.

“Having cameras around in a young democracy such as Serbia can be problematic because of the potential for political misuse,” says Ljubiša Bojić, coordinator of the Digital Sociometrics Lab at the University of Belgrade, which studies the effects of artificial intelligence (AI) on society.

When the government announced the project, it gave few details. But SHARE found a 2018 press release on Huawei’s website (which the firm deleted) that announced tests of high-definition cameras in Belgrade.

After SHARE and others pressed for more details, the Serbian government said that data wouldn’t be collected or kept by Huawei. But Lee Tien, a senior staff attorney at the Electronic Frontier Foundation in San Francisco, California, says that one of the main reasons large technology firms — whether in China or elsewhere — get involved in supplying AI surveillance technology to governments is that they expect to collect a mass of data that could improve their algorithms.

Serbia models its data-protection laws on the European Union’s General Data Protection Regulation (GDPR), but it is unclear whether the interior ministry’s plans satisfy the country’s laws, Serbia’s data-protection commissioner said in May. (The interior ministry declined to comment for this article, and Huawei did not respond to questions.)

Overall, there haven’t been studies proving that ‘safe’ or ‘smart’ cities reduce crime, says Pete Fussey, a sociologist at the University of Essex in Colchester, UK, who researches human rights, surveillance and policing. Fussey says anecdotal claims are being leveraged into a proof of principle for a surveillance technology that is still very new.

In March, Vladimir Bykovsky, a Moscow resident who’d recently returned from South Korea, left his apartment for a few moments to throw out his rubbish. Half an hour later, police were at his door. The officers said he had violated Covid-19 quarantine rules and would receive a fine and court date. Bykovsky asked how they’d known he’d left. The officers told him it was because of a camera outside his apartment block, which they said was connected to a facial-recognition surveillance system working across the whole of Moscow.

“They said they’d received an alert that quarantine had been broken by a Vladimir Bykovsky,” he says. “I was just shocked.”

The Russian capital rolled out a city-wide video surveillance system in January, using software supplied by Moscow-based technology firm NtechLab. The firm’s former head, Alexey Minin, said at the time that it was the world’s largest system of live facial recognition. NtechLab co-founder Artem Kukharenko says it supplies its software to other cities, but wouldn’t name locations because of non-disclosure agreements.

Asked whether the system had cut down on crime, he pointed to Moscow media reports of hooligans being detained during the 2018 World Cup tournament, when the system was in test mode. Other reports say the system spotted 200 quarantine breakers during the first few weeks of Moscow’s Covid-19 lockdown.

Like Russia, governments in China, India and South Korea have used facial recognition to help trace contacts and enforce quarantine; other countries probably have, too.

Researchers worry that the use of live-surveillance technologies is likely to linger after the pandemic. This could have a chilling effect on societal freedoms. Last year, a group set up to provide ethical advice on policing asked more than 1,000 Londoners about the police’s use of live facial recognition there; 38 per cent of 16–24-year-olds and 28 per cent of Asian, Black and mixed-ancestry people surveyed said they would stay away from events monitored with live facial recognition.

Many researchers, and some companies, including Google, Amazon, IBM and Microsoft, have called for bans on facial recognition — at least on police use of the technology — until stricter regulations are brought in.

When it comes to commercial use of facial recognition, some researchers worry that laws focused only on gaining consent to use it aren’t strict enough, says Woodrow Hartzog, a computer scientist and law professor at Northeastern University in Boston, Massachusetts, who studies facial surveillance. It’s very hard for an individual to understand the risks of consenting to facial surveillance, he says. And they often don’t have a meaningful way to say ‘no’.

The Algorithmic Justice League, a researcher-led campaigning organisation founded by computer scientist Joy Buolamwini at the Massachusetts Institute of Technology in Cambridge, has been prominent in calling for a US federal moratorium on facial recognition.

In 2018, Buolamwini co-authored a paper showing how facial-analysis systems are more likely to misidentify gender in darker-skinned and female faces. And in May, she and other researchers argued in a report that the United States should create a federal office to manage FRT applications, much as the US Food and Drug Administration approves drugs or medical devices.

- A Nature magazine report